If you want to study an organisation, email is a rich source, but what have we learnt about the architecture of a solution that allows this analysis to be conducted? First, let me caveat the solution I’ll describe. It was built for an enterprise of approximately 2,000 users (nodes) that manages all of its own email. That enterprise is part of a larger organisation, and we have some interest in the connections between the two; for this analysis there are approximately 10,000 nodes. If all available emails are included (including those from outside the larger organisation) then there are approximately 100,000 nodes, but we have not performed analysis at this scale. Not all technologies suit all scales of analysis (for example, NodeXL is really only effective on graphs with under 2,000 nodes), so please bear this in mind for your own domain.

Step 1: Getting hold of the email: our example organisation uses Microsoft Exchange Server, which allows the administrator to enable Message Tracking logs. These logs include the sender and recipient list for each email, the date and time it was sent and some other pieces of information. The logs will never include the content of the message but can be configured to include the subject of the message. Depending on your organisation’s security and/or privacy policies, the subject could be contentious to collect, but it is useful if you can obtain it; I’ll be posting a follow-up entitled “Dealing with Sensitive Data” which describes how the message subject can be used whilst maintaining privacy.

Step 2: Somewhere to put it: ultimately a graph database (like Neo4j) or a graph visualisation tool (like Gephi) is going to be the best way to analyse many aspects of the data. However, I would recommend first loading the email data into a good old-fashioned relational database (I’ve used SQL Server Express 2012 for everything I describe here). Reasons for doing this are: (1) familiarity: it’s easy to reason about the data if you have a relational database background; (2) you can perform some very useful analysis directly from the database; (3) it allows you to incrementally load data (I’ve not found this particularly simple in Neo4j); (4) it’s easy to merge with other relational data sources. The structure I’ve discovered works best is as follows:

• “node” table: 1 row per email address, consisting of an integer ID (primary key) and the email address.

• “email” table: 1 row per email with an integer email ID, the ‘node’ ID of the sender, the date and time.

• “recipient” table: an email can be sent to multiple recipients; this table has no unique ID of its own but instead has the “email” ID and the “node” ID of each recipient

The tables are shown below. Note that there are some additional fields that I won’t go into now; the important ones are the first three in “email” and the first two in “node” and “recipient”.
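To make the three-table structure concrete, here is a minimal sketch of it. It is not the schema I actually used: it swaps in Python’s built-in sqlite3 module for SQL Server so that it can be run anywhere, the column names (node_id, address, email_id, sender_id, sent_at) are my own illustrative choices, and the additional fields are left out. The query at the end is one example of the kind of analysis you can run directly from the database: counting emails per sender/recipient pair.

```python
import sqlite3

# Illustrative schema only: table names follow the post, column names are assumed.
SCHEMA = """
CREATE TABLE IF NOT EXISTS node (
    node_id INTEGER PRIMARY KEY,          -- integer ID
    address TEXT NOT NULL UNIQUE          -- one row per email address
);
CREATE TABLE IF NOT EXISTS email (
    email_id  INTEGER PRIMARY KEY,        -- integer email ID
    sender_id INTEGER NOT NULL REFERENCES node(node_id),
    sent_at   TEXT NOT NULL               -- date and time, stored as text here
);
CREATE TABLE IF NOT EXISTS recipient (    -- no unique ID of its own
    email_id INTEGER NOT NULL REFERENCES email(email_id),
    node_id  INTEGER NOT NULL REFERENCES node(node_id)
);
"""

# Emails sent per (sender, recipient) pair, busiest pairs first.
EDGE_WEIGHTS = """
SELECT s.address AS sender, r.address AS recipient, COUNT(*) AS emails
FROM email e
JOIN recipient rc ON rc.email_id = e.email_id
JOIN node s ON s.node_id = e.sender_id
JOIN node r ON r.node_id = rc.node_id
GROUP BY s.address, r.address
ORDER BY emails DESC
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# Made-up sample data: two emails from alice to bob.
conn.executemany("INSERT INTO node (node_id, address) VALUES (?, ?)",
                 [(1, "alice@example.com"), (2, "bob@example.com")])
conn.executemany("INSERT INTO email (email_id, sender_id, sent_at) VALUES (?, ?, ?)",
                 [(1, 1, "2013-01-07T09:15:00"), (2, 1, "2013-01-08T10:00:00")])
conn.executemany("INSERT INTO recipient (email_id, node_id) VALUES (?, ?)",
                 [(1, 2), (2, 2)])

edges = conn.execute(EDGE_WEIGHTS).fetchall()
print(edges)
```

The sender/recipient counts are effectively weighted edges, which is part of why the relational form is a convenient staging post on the way to a graph.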

Step 3: Extract from Email Logs, Transform and Load into the relational database: first you’ll want to open and read each email log file. The Exchange message tracking logs repeat the information we are concerned with several times, as they record the progress of each message at a number of points. I found the best way to get at the data I wanted was to find each line that has an event-id of “RECEIVE” and a source-context that is not “Journaling” (you may not have Journaling in your files, but it is probably worth excluding in case it gets enabled one day). The rest is pretty simple: create a “node” record for the sender-address if one does not already exist; create a new “email” record (the sender_ID is the “node” record just created/found); and then, for each address in recipient-address, create a “recipient” record using the email ID just created and a new or existing “node” ID for that recipient.
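The filtering step can be sketched roughly as follows. This is not my original loader; it is a small Python illustration that rests on several assumptions you should check against your own files: that each log is comma-separated with a “#Fields:” header line naming the columns, that recipient-address holds multiple addresses separated by semicolons, and that addresses can safely be lower-cased before matching. The function name iter_received and the sample log (a made-up, cut-down set of fields) are purely illustrative.

```python
import csv

def iter_received(lines):
    """Yield (date_time, sender, recipients) for each qualifying RECEIVE line."""
    fields = None
    for line in lines:
        line = line.rstrip("\r\n")
        if line.startswith("#Fields:"):
            # Assumed header format: "#Fields: name1,name2,..."
            fields = [f.strip() for f in line[len("#Fields:"):].split(",")]
            continue
        if not line or line.startswith("#") or fields is None:
            continue  # skip blank lines, other comment lines, data before a header
        row = dict(zip(fields, next(csv.reader([line]))))
        if row.get("event-id") != "RECEIVE":
            continue  # the same message is logged at several other points
        if row.get("source-context", "").strip().lower() == "journaling":
            continue  # exclude journaling copies
        recipients = [r.strip().lower()
                      for r in row.get("recipient-address", "").split(";")
                      if r.strip()]
        sender = row.get("sender-address", "").strip().lower()
        yield row.get("date-time", ""), sender, recipients

# Made-up sample with only the fields this sketch needs.
SAMPLE_LOG = """#Fields: date-time,source-context,event-id,recipient-address,sender-address
2013-01-07T09:15:00.000Z,,RECEIVE,bob@example.com;carol@example.com,alice@example.com
2013-01-07T09:15:01.000Z,,DELIVER,bob@example.com,alice@example.com
2013-01-07T09:16:00.000Z,Journaling,RECEIVE,journal@example.com,alice@example.com
"""

events = list(iter_received(SAMPLE_LOG.splitlines()))
for event in events:
    print(event)
```

Of the three sample lines only the first survives: the second is not a RECEIVE event and the third is a journaling copy.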

Email logs can add up to a lot of data, so I’d advise loading them incrementally rather than clearing down the database and re-loading every time you get a new batch. This requires you to consider how to take account of existing node records: you could do this with a bit of stored procedure logic, but my approach was to load all the existing nodes into an in-memory hash table and keep track that way.
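Here is a rough sketch of the in-memory hash table idea, again in Python with sqlite3 standing in for SQL Server. The names NodeCache, get_or_create and load_email, and the cut-down schema, are hypothetical and only there to make the example self-contained. It only deals with reusing node records across batches; it does not try to detect an email that has already been loaded.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS node (node_id INTEGER PRIMARY KEY, address TEXT NOT NULL UNIQUE);
CREATE TABLE IF NOT EXISTS email (email_id INTEGER PRIMARY KEY, sender_id INTEGER NOT NULL, sent_at TEXT NOT NULL);
CREATE TABLE IF NOT EXISTS recipient (email_id INTEGER NOT NULL, node_id INTEGER NOT NULL);
"""

class NodeCache:
    """In-memory map of email address -> node ID, seeded from the database."""

    def __init__(self, conn):
        self.conn = conn
        # Load every existing node once, at the start of a batch.
        self.ids = {address: node_id
                    for node_id, address in conn.execute("SELECT node_id, address FROM node")}

    def get_or_create(self, address):
        node_id = self.ids.get(address)
        if node_id is None:
            cur = self.conn.execute("INSERT INTO node (address) VALUES (?)", (address,))
            node_id = cur.lastrowid
            self.ids[address] = node_id
        return node_id

def load_email(conn, cache, sent_at, sender, recipients):
    """Insert one email plus a recipient row for each recipient address."""
    sender_id = cache.get_or_create(sender)
    cur = conn.execute("INSERT INTO email (sender_id, sent_at) VALUES (?, ?)",
                       (sender_id, sent_at))
    email_id = cur.lastrowid
    conn.executemany("INSERT INTO recipient (email_id, node_id) VALUES (?, ?)",
                     [(email_id, cache.get_or_create(r)) for r in recipients])
    return email_id

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# First batch.
cache = NodeCache(conn)
load_email(conn, cache, "2013-01-07T09:15:00", "alice@example.com", ["bob@example.com"])
conn.commit()

# A later batch: a fresh cache picks up the nodes created by the first one.
cache = NodeCache(conn)
load_email(conn, cache, "2013-01-08T10:00:00", "bob@example.com",
           ["alice@example.com", "carol@example.com"])
conn.commit()

node_count = conn.execute("SELECT COUNT(*) FROM node").fetchone()[0]
email_count = conn.execute("SELECT COUNT(*) FROM email").fetchone()[0]
recipient_count = conn.execute("SELECT COUNT(*) FROM recipient").fetchone()[0]
print(node_count, email_count, recipient_count)
```

With the node counts I mentioned at the start, holding every address in memory is cheap, and it avoids a database round trip for every sender and recipient.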

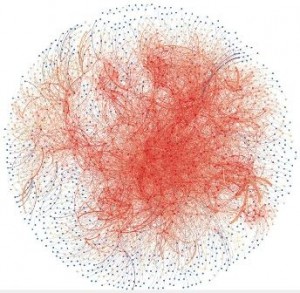

In part 2 I’ll explore how to get the relational representation into a graph.
